I am a user of SVN and was a fan of it as well. But the following video demonstrated some requirements of SCM that I liked a lot. As I want to work in a distributed, offline, modular and, above all, fast manner, GIT came to me as a gift and I want to share it with everyone. This Tech Talk from Linus actually convinced me to take a deep look at GIT.

Then this video (which for some reason YouTube does not allow me to embed) helped me understand more about how GIT works and gave me some tips for working better with GIT.

This image could be helpful to keep beside your desk, and a larger-resolution version is available if you want it. These images and other documentation are available at the GIT Wiki site. If you are looking for an extended cheat sheet you can find it here. If you are looking for the manual, it is here, and you might also find the "Everyday GIT ..." page helpful. Some useful How-Tos can be found here. If you are looking for how to set up a hook, have a look here; you can also find sample hook scripts by running locate hooks on your Linux box.

I have developed such a liking for GIT that I have started writing a Maven2 SCM Provider for GIT (using the Mercurial provider as an example). Once I am done with it I will also write a NetBeans VCS plugin, again modelled on the Mercurial plugin (if no VCS plugin for GIT becomes available in NetBeans before that).

Retrieving all classes in a package

Straight to the point - I had to list all ReadOnly attributes of my domain objects in the office Wiki. I had several of them and doing that manually would be tiring (not to mention that I actually do not like documentation that much), so I had to find a shortcut, and the only way was to use reflection. The next question was how I could simply mention the package and have the code do the rest for me. After Googling I could not find a solution to my liking, so I thought of writing one myself, and it took 10~15 minutes to come up with this piece of code.

I did the following with it and it worked nicely for me -

javac GenerateProperties.java

java -cp /opt/jdk1.6.0_01/jre/lib/rt.jar:./ GenerateProperties

If you are running your program from an IDE and have several modules in your classpath (i.e. their target/classes/) and/or zip/jar(s), it will also search through them to list the methods. I am thinking of making a small framework, or API, out of it. I would welcome enhancements to this code; please feel free to send them to me.

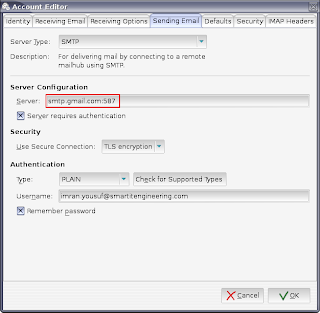

SMTP setting for GMail in Evolution

This post of mine probably involves the least technical depth :). It is mainly about configuring SMTP for GMail in the Evolution mail client (not that it could not be figured out from the image). As GMail users know, GMail does not use the default SMTP port (25), so the custom port number has to be given in the configuration. Though there is no field labelled Port, users can easily set the port number as I did, by writing the server as server-address:port. Please see the red-bordered region in the image. If required, the port number can be provided in the same fashion for the incoming mail server.

Modular project development - with Maven and GIT

For some time I have been searching for a combination of SCM and project build tool for multi-module project development. After using and testing some tools I have settled on GIT and Maven; honestly speaking, I have been quite impressed by GIT. The main repository for my projects will be either CVS or SVN, because dev.java.net provides only those, but the developers will be requested/encouraged to use GIT (through online collab) as their local/team SCM/VCS. As a result of my conviction I have decided to start a plugin for GIT-NetBeans integration as well; Almighty willing. At first I will use it for the DatabaseReport and JAIN SIP adapter projects. Everyone interested can join in any of the 3 projects (including the GIT-NetBeans Plugin).

NetBeans RCP development with Maven

I have been wanting to work with NetBeans RCP for quite some time now, but I felt that resources were lacking. Then came the API changes for the NetBeans 6.0 Platform, and I feel there is a lot of improvement in it, along with its platform home, the excellent RCP book and Maven integration for plugin/suite/Rich Client Application development (I personally tested whether it works and it does so smoothly, except that when I was reading it Figure 4 and Figure 6 were in swapped places). Now I reckon NetBeans RCP is a force. The biggest advantage of NetBeans RCP, and the one that initially drew me to NetBeans, was the JavaHelp integration.

I just wish NetBeans also supported GIT. Actually I am planning to add support for it in NetBeans.


Killing a process in Linux

As a software developer, one thing I have had to do several times is kill a process. Some people will term it ugly or bad, but software developers often come across situations where killing a process is handy or necessary. I will mention some use cases later in the blog.

Killing a process usually involves 2 steps: finding the ID of the process that is to be terminated, and terminating it with a kill statement. Step one, for general users (like myself), involves a ps command with some grepping and then a manual lookup. I have to do these almost every time I kill a process. I have simply put these steps into this script so that I can do them in a single command. If someone has any improvements please inform me about them.

The shell script expects 3 arguments, of which 2 are mandatory and 1 is optional. The first 2 are grep params to identify the process. For example, to identify a Tomcat running Escenic Content Engine I use java and escenic.root to identify the process. The third param is the user to search it with. If it is not provided then whoami will be used to determine the user executing the shell.
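The original script is not reproduced here, so the following is only a minimal sketch of how a script with this argument convention could look. The function names filter_pids and kill_matching are hypothetical, matching is by plain substring rather than full grep syntax, and the sketch assumes a ps that accepts -u and -o pid=, ppid=, args= (as procps on Linux does).

```shell
#!/bin/sh
# Sketch only: filter_pids and kill_matching are hypothetical names.

# filter_pids PATTERN1 PATTERN2 SELF_PID
# Reads "PID PPID COMMAND..." lines on stdin and prints the PID of every
# line whose command contains both patterns as plain substrings. Lines
# belonging to SELF_PID or to its direct children are skipped, so the
# script does not select itself.
filter_pids() {
    awk -v p1="$1" -v p2="$2" -v self="$3" '
        $1 == self || $2 == self { next }
        {
            cmd = $0
            # strip the leading PID and PPID columns
            sub(/^[ \t]*[0-9]+[ \t]+[0-9]+[ \t]+/, "", cmd)
            if (index(cmd, p1) > 0 && index(cmd, p2) > 0) print $1
        }'
}

# kill_matching PATTERN1 PATTERN2 [USER]
# USER defaults to the output of whoami, as described above.
kill_matching() {
    if [ "$#" -lt 2 ]; then
        echo "usage: kill_matching PATTERN1 PATTERN2 [USER]" >&2
        return 2
    fi
    user=${3:-$(whoami)}
    # Take the process listing first, so the awk filter is not in it.
    listing=$(ps -u "$user" -o pid= -o ppid= -o args=)
    pids=$(printf '%s\n' "$listing" | filter_pids "$1" "$2" "$$")
    if [ -z "$pids" ]; then
        echo "no matching process for user $user" >&2
        return 1
    fi
    # Plain SIGTERM; escalate by hand only if the process ignores it.
    kill $pids
}
```

Saved to a file that ends with a call such as kill_matching "$@", the Tomcat example above would become a single command with java and escenic.root as the two arguments, plus an optional user name as the third.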

This shell script has enabled me to sync, build and deploy my projects all with a single command, and this saves me a substantial amount of time. Even without a build server I can achieve what a build server like Hudson can do. Actually I use this script from within Hudson, and thus I almost "never" have to be concerned about deploying applications to my demo server. I hope this is useful to all.


Guarding against software with memory leaks

I have been using IntelliJ IDEA as the IDE for my project development. It has been satisfying, but sometimes it annoys me a lot. For example, I am typing in the code editor and all of a sudden I can't hear the music playing (which I always play while coding), the IDE stops responding and the mouse pointer hardly moves. The only way to start working normally again is to restart my laptop, and on a laptop a forced reboot is not a happy scenario; moreover, I do not like a forced reboot as a solution. So first I had to detect what caused the catastrophe, and I found that the Java VM running IDEA keeps taking up memory linearly, climbing at a 45-degree angle in the resource monitor. Furthermore, this is not an infrequent event; it usually occurs 2-3 times a day.

As I could not live with the reboot, I had to make sure it did not occur, and for that I thought of a solution - I will have a cron job that monitors the memory used by IDEA and kills it if it crosses a certain amount. So I wrote a shell script and created a cron job following the Ubuntu help. That solved my problem. The shell script can actually be used to kill any Java process that leaks memory and might disrupt normal use of the PC.
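The script itself is not shown in this post, so here is only a rough sketch of what such a guard could look like. The names over_limit and guard_memory are hypothetical, the threshold is left to the caller, and it assumes that ps reports the rss column in kilobytes (as procps on Linux does).

```shell
#!/bin/sh
# Sketch only: over_limit and guard_memory are hypothetical names.

# over_limit LIMIT_KB PATTERN
# Reads "PID RSS_KB COMMAND..." lines on stdin and prints the PID of every
# process whose resident memory exceeds LIMIT_KB and whose command line
# contains PATTERN as a plain substring.
over_limit() {
    awk -v limit="$1" -v pat="$2" '
        {
            cmd = $0
            # strip the leading PID and RSS columns
            sub(/^[ \t]*[0-9]+[ \t]+[0-9]+[ \t]+/, "", cmd)
            if ($2 + 0 > limit + 0 && index(cmd, pat) > 0) print $1
        }'
}

# guard_memory LIMIT_KB PATTERN
# Sends SIGTERM to each of the current user's processes over the limit.
guard_memory() {
    # Assumption: rss is reported in kilobytes.
    listing=$(ps -u "$(whoami)" -o pid= -o rss= -o args=)
    for pid in $(printf '%s\n' "$listing" | over_limit "$1" "$2"); do
        kill "$pid"
    done
}
```

A script that ends with a call such as guard_memory 1500000 idea (both values are made up for illustration) could then be scheduled from the user's crontab, for example once a minute, so the leaking process is stopped before the machine becomes unresponsive.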

If anyone has any alternate solution please feel free to share it.


WinRAR on Ubuntu

As a novice Linux and Ubuntu Feisty Fawn user I have faced some issues related to RAR archives. WinRAR is the Windows tool widely used for this purpose and it is also a powerful archiver. If you have Wine installed, as mentioned in one of my earlier blogs, you can install WinRAR in no time following this.

I am just mentioning the steps in short.

- Download WinRAR 3.7

- Install it with wine {PATH_TO_EXE}, e.g. wine wrar371.exe. Having desktop emulation configured in Wine is necessary: winecfg -> Graphics -> Emulate.... checkbox

- Run WinRAR with the following command:

wine {PATH_TO_INSTALLATION}/WinRAR.exe

Developing projects that support multiple Databases

It is a common scenario in product/project development that there is a requirement to support multiple databases. This leads to 3 challenges - generating DDLs for each DBMS, keeping the queries in the Data Access Layer portable across the DBMS platforms, and optimizing the read SQLs for the specific DBMS platform. This blog entry will only introduce a probable solution to the first of the three challenges.


DDL usually differs from one DBMS to another. When it comes to generating DDLs there is the issue of creating versus altering a table, i.e. when the DDLs are executed for the first time they should create the database, whereas on subsequent executions they should only run the alter table statements, to ensure that only the changes take effect. There is also the issue of being able to generate the DDLs when required - both the whole DB script and the alter table script. We would also like the script to be generated and executed only in specific phases of the build process.

After searching the internet the tool that caught my eye is the DDL Utils project of the Apache DB Project. This tool fulfils the requirements described above when used in conjunction with Maven. Have a look at the following sample project to see how it can be achieved.

Sample Project

After decompressing the sample project, please go to the maven-plugin folder and execute the shell script. Windows users can simply run the same command that is in it :). As the example uses MySQL, please check the connection parameters in /src/main/resources/com/smartitengineering/.../schema/db-connection-params.properties.

The project is built around Maven, and thus the DB operations are attached to the Maven commands compile, install and deploy. Their outcomes are as follows:

- mvn compile - Generates the DDL for the whole schema regardless of the current DB state

- mvn install - Generates the DDL required to sync the DB to the current schema; that is, it generates the minimum possible set of alter table statements

- mvn deploy - Executes the alter table DDLs on the DB to sync it with the latest state

DDL-Utils Ant Tasks

Schema Documentation

In order to get the deploy working you will need a valid repository to deploy to. That is the only thing that needs to be edited in the POM file.

All in all the tool is very handy when it comes to DDL generation and history maintenance. The only thing I found missing in the tool's release is support for ON UPDATE/DELETE CASCADE/RESTRICT/.. Though the schema supports it, the implementation does not; but considered as a whole this is quite a useful tool. The good news is that the source code in SVN already supports it during creation, though not yet in altering, so we can hope to see it soon as well.

Getting Intel 82801G to work in Feisty Fawn

My new laptop is an Acer Aspire 5585WXMi. It ships with the ALC883 Intel HDA chipset. I could get everything to work with Ubuntu Feisty Fawn except for my sound card, and it was so sad that I could basically work with everything except sound.

After trying a lot of things, all of a sudden it started to work. So I started looking into why and how. I noticed that I had ALSA 1.0.15rc1 installed from source; I did that following the instructions in the Ubuntu help, but the wiki alone did not solve the problem at the time. Later I installed the alsaplayer-alsa, alsaplayer-gtk, alsa-tools and alsa-tools-gui packages and ran alsaconf again. I used the ALSA mixer to turn the PCM channel on and set it to full volume, and then all of a sudden, hurrah! There is sound on my laptop. I do not think that upgrading to ALSA 1.0.15 is required; it could probably be achieved with 1.0.13 as well if the additional packages are installed, but that is my hypothesis and I am not absolutely certain. I hope this works for others as well. If it does, let me know.


Bridging SIP Servlets and JAIN SIP

As discussed in one of my earlier blogs, there are 2 SIP stacks that can be used to build SIP applications - the SIP Servlet API and the JAIN SIP API. Both have their advantages and disadvantages. For example, the JAIN SIP API provides a more structured way of building SIP applications and can be used for any of the SIP entities, while SIP Servlet is more suitable for server-side SIP entities. That said, SIP Servlet has the advantage of leveraging enterprise services more directly, as it is integrated with Java EE containers.

As I am planning to develop a VVoIP framework, I would like to leverage the strengths of both. So I have decided to develop a bridge (or, more appropriately, an adapter) between the APIs, and that initiative has resulted in the JAIN SIP API Adapter project. The project's aim is to let JAIN SIP API based server-side applications benefit from Java EE services by using the SIP Servlet API. I hope to complete this project in the next 3 months and release a beta version. Initially the system will be tested on SailFin with an IM server I developed based on the JAIN SIP API.


Video Recording using Flash

Recording video using Flash is a rising trend. YouTube Quick Capture is one example that has caught our eyes. So I was trying to get something done in the same way. I am basically a Java developer and have experience of developing a video recorder using the Java Media Framework. Though I am a Java fanatic, I feel that at present Flash is the way to do video on the Internet. Its adoption in video technology is very widespread and well acclaimed. This blog is about video recording.

The first thing that is required for Flash video recording is a media server for streaming; because Flash, unlike a signed applet, cannot store to the local disk, it instead streams the captured video to a media server. There is Adobe Media Server or Flash Communication Server, which we can use after procuring a licence, or there is Red5, thanks to the open source community. Red5 comes with a sample simple video recorder, using which you can simply stream video to the server. If we look at a customized Simple Recorder (it is only the recorder and there is no attached streaming server :) if anyone can help please let me know), we will find that for the sole purpose of recording there is a video container that shows the video. Initially it shows the video currently being captured, and once the recording is stopped it plays back the stream that was just recorded. These events are triggered by the buttons on the SWF video. It uses the oflaDemo Red5 application provided as a demo with the server. All streamed videos are stored in {RED5_HOME}/webapps/oflaDemo/streams/.

Now let's look at the code used for this purpose. The first task is to open a stream when we intend to record something.


var nc:NetConnection = new NetConnection();

// connect to the local media server

// rtmp://{host}:{port}/{Red5_application_context}

// default port is 1935

nc.connect("rtmp://localhost/oflaDemo");

// create the netStream object and pass the netConnection object in the

// constructor

var ns:NetStream = new NetStream(nc);

The second thing that we can do is detect and select the capture devices.

// get references to the camera and mic

var cam:Camera = Camera.get();

var mic:Microphone = Microphone.get();

Above we selected the default devices, but we could use Camera.names to get the names of the capture devices and select the one that we want; this is something that YouTube does :). Next we need to configure the video capture stream.

// setting dimensions and framerate

cam.setMode(320, 240, 30);

// set to minimum of 70% quality

cam.setQuality(0,70);

// setting sampling rate to 8kHz

mic.setRate(8);

Next we need to add the device streams to the capture stream.

// This FLV is recorded to webapps/oflaDemo/streams/ directory

// attach cam and mic to the NetStream Object

ns.attachVideo(cam);

ns.attachAudio(mic);

After that we need to add the camera to the container and publish the video.

// attach the cam to the videoContainer on stage so we can see ourselves

videoContainer.attachVideo(cam);

// Publish the video and mention record

// lastVideoName is the name of the video and it will be saved as

// lastVideoName.flv

ns.publish(lastVideoName, "record");

Once the recording is done, attach the captured stream to the video container in place of the camera and tell the stream to play the just recorded video.

// attach the netStream object to the video object

videoContainer.attachVideo(ns);

// play back the recorded stream

ns.play(lastVideoName);

Hopefully with these steps video recording and stream playback should be possible.

Stickies on Ubuntu

It seems that Wine is an excellent tool for running Windows applications on Ubuntu. Recently I had to use Stickies and tried it on Ubuntu. So first I had to install Wine. I executed the following commands to install Wine on Ubuntu 7.04 Feisty.

sudo wget http://wine.budgetdedicated.com/apt/sources.list.d/feisty.list -O /etc/apt/sources.list.d/winehq.list

sudo apt-get update

sudo apt-get install wine

For other platforms you can have a look at http://www.winehq.org/site/download. Then you simply have to download stickies.exe and execute

wine {PATH-TO-STICKIES}/stickies.exe

But hold on, the installation is not done; you need to follow the installation steps mentioned in

http://appdb.winehq.org/appview.php?iVersionId=5169&iTestingId=4356

Scroll down to Install Note and follow that. The only change is in installing the MSI file: instead of the command mentioned there, use the following to install the MSI file

wine msiexec /i {PATH-TO-MSI}/MSISetup.MSI

Now try to execute

wine {PATH-TO-STICKIES-INSTALLATION}/stickies.exe

It might say that MFC42.dll is missing; if so, please get it from a Windows installation, copy it to ~/.wine/drive_c/windows/system32/ and try to execute it again.

Hopefully that will be enough for Stickies Installation :).

Now to get Stickies running you can execute the following command:

sudo wine ~/.wine/drive_c/Program\ Files/stickies/stickies.exe > {PATH-TO-LOG} 2>&1 &

Though this is for Ubuntu, I guess that apart from the installation procedure the rest should be the same across Linux platforms. Let me know if any further changes are required.

SIP application structure

As mentioned in my previous blog I feel that SIP applications are the next generation of applications for Desktop, Web and Mobile. By the will of Almighty, today I will be discussing the structure of SIP applications.

We will see the main layers of the application and discuss them in a bottom-up approach. Any SIP application contains 3 layers. Topmost is the Application Layer, where all the logic of the application resides and which takes care of the SIP packets. Then comes the SIP stack itself, which is responsible for abstracting the SIP packets from the application developer by providing an API to do the low-level SIP work. Lastly comes the Transport layer, which is responsible for carrying the SIP packets and sending them to the intended destination; the SIP stack is very closely bound to this layer, as it provides transport for common protocols, and developers have to extend the transport API to add more transport layer protocols.

Almost any transport layer protocol can be used with SIP as long as the SIP stack recognizes it. The most commonly used transport layer protocol is UDP; on some occasions TCP and TLS are also used. We all know that all networks are converging towards an IP backbone, and as those protocols are IP based it should be more convenient for developers in future, as they will not have to write SIP transport layers for different protocols. One challenge would be the link from cell phone to BTS; SIP stack providers for cell phones will have to implement a transport layer for GSM and CDMA phones.

IMHO the most important layer of the application structure is the SIP stack. It abstracts the SIP packets and the Transport layer hassles away from the developers and provides them with APIs to call in order to build a SIP application. There are quite a few SIP stacks available, such as reSIProcate, Jain SIP (JSR-32) and SIP Servlet (JSR-000116). Among them, a SIP Servlet application (an archive with the sar extension) is usually deployed in a Java EE container (BEA Weblogic, IBM Websphere, GlassFish-SailFin) or in a SIP container implementing SIP Servlet. Personally I have used Jain SIP, and its simplicity of use and good API structure have made me like it. In my blogs related to SIP I will be using this stack to develop examples. I will also discuss SailFin.

The Application layer is where everybody gets to exercise their innovations. Now is the time to generate SIP-centric ideas and implement them. All SIP applications can be grouped into four categories -

- Registrar Server - A server responsible to register a SIP user and its location

- Proxy Server - A server that acts as the main server towards the client, whereas it is nothing but a proxy that knows which is the real server

- Redirect Server - A server that redirects request to another server; an example of this functionality would be load balancing of requests

- User Agent - This is a client-side entity responsible for communicating with the server and serving the client's requests. Anything could be a user agent, such as PC software, a cell phone, refrigerator, television, microwave oven etc.

By the will of Almighty, I will soon make some postings about what could be SIP applications.

SIP - For next generation communication

I was introduced to SIP (Session Initiation Protocol) in my college courses, and it has appealed to me a lot ever since. As time passed I came to realize that, with the rate at which everything is getting globalized, it is only a matter of time before VoIP rules the world of communication. As the internet is already accepted as the global information highway, communication is also bound to be built on it. SIP is an IETF-engineered protocol and open to everybody's suggestions.

Recently SIP-related activities have been very visible. With the popularity of the reSIProcate and Jain-SIP protocol stacks, and with major Java EE container vendors supporting SIP Servlets, it is easy to see that the industry is expecting an increase in the use of SIP in business applications, as more and more applications want to include web-based voice/video chat.

Recently, GlassFish project has introduced the SailFin project, which is a SIP Servlet container. Hopefully, by the will of Almighty, it will also include support for easy portability for Jain-SIP based server applications into its container as well.

If I were to bet on a technology of the next generation I would place my bet on SIP based applications. By the will of Almighty, I hope to bring more writeups about SIP applications - mostly server side.

